Hi! I'm a second-year Ph.D. student in Computer Science at the University of Illinois Urbana-Champaign, where I am advised by Prof. Derek Hoiem. I like to build general-purpose, adaptable vision and robotics systems, particularly models that can continually acquire new skills and concepts over time.

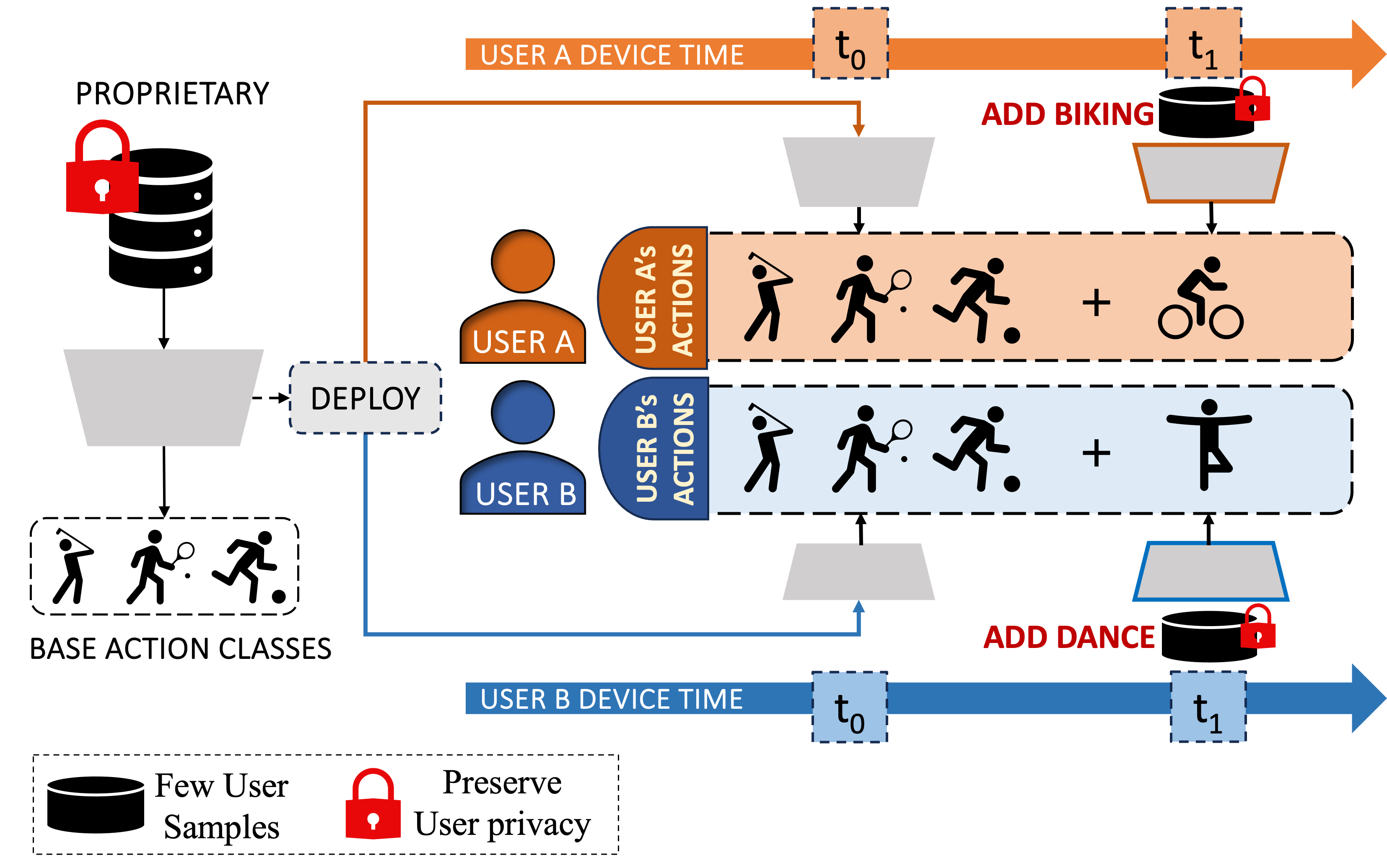

Previously, I completed my Master of Science in Robotics at Carnegie Mellon University. For my thesis research, I worked on continual personalization of human action recognition, advised by Prof. Fernando De La Torre and in collaboration with the XR Input Team at Meta Reality Labs. I spent summer 2024 building the multi-view camera-LiDAR 3D perception pipeline for the US Department of Transportation, Safe Intersection Challenge with Prof. Srinivasa Narasimhan. In summer 2025, I interned with the Semantics Understanding Team at Waymo LLC.

In the past, I have had the wonderful opportunity to work with Prof. Vineeth N Balasubramanian (CMU and IIIT-Hyderabad), Prof. C V Jawahar (IIIT-Hyderabad), and Prof. Frederic Jurie (in beautiful Normandy, France).

Recent Publications

POET: Prompt Offset Tuning for Continual Human Action Adaptation

ECCV 2024, Oral Presentation (2.32%)

|

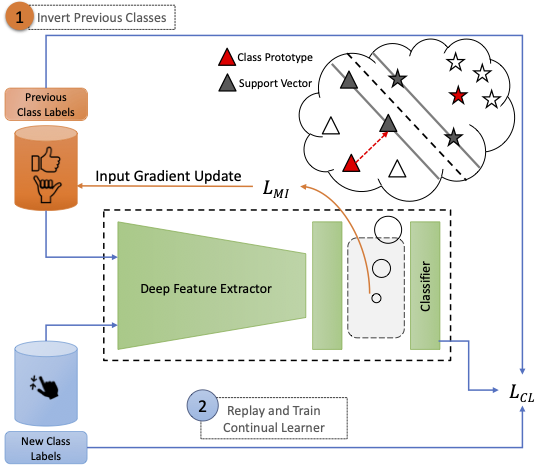

Data-Free Class-Incremental Hand Gesture RecognitionICCV 2023 |

|

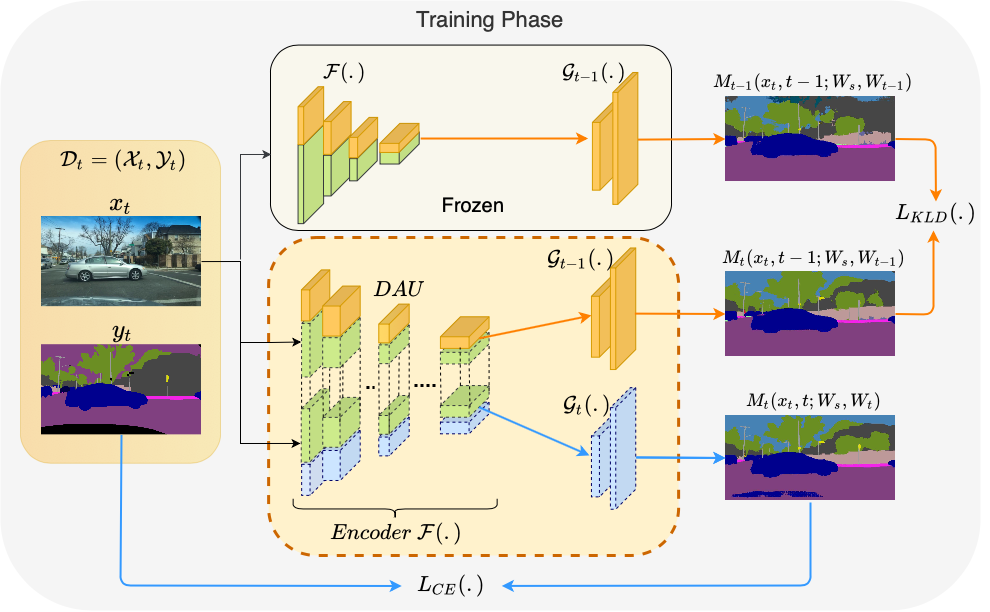

Multi-Domain Incremental Learning for Semantic SegmentationWACV 2022 |


Selected Research Projects

|

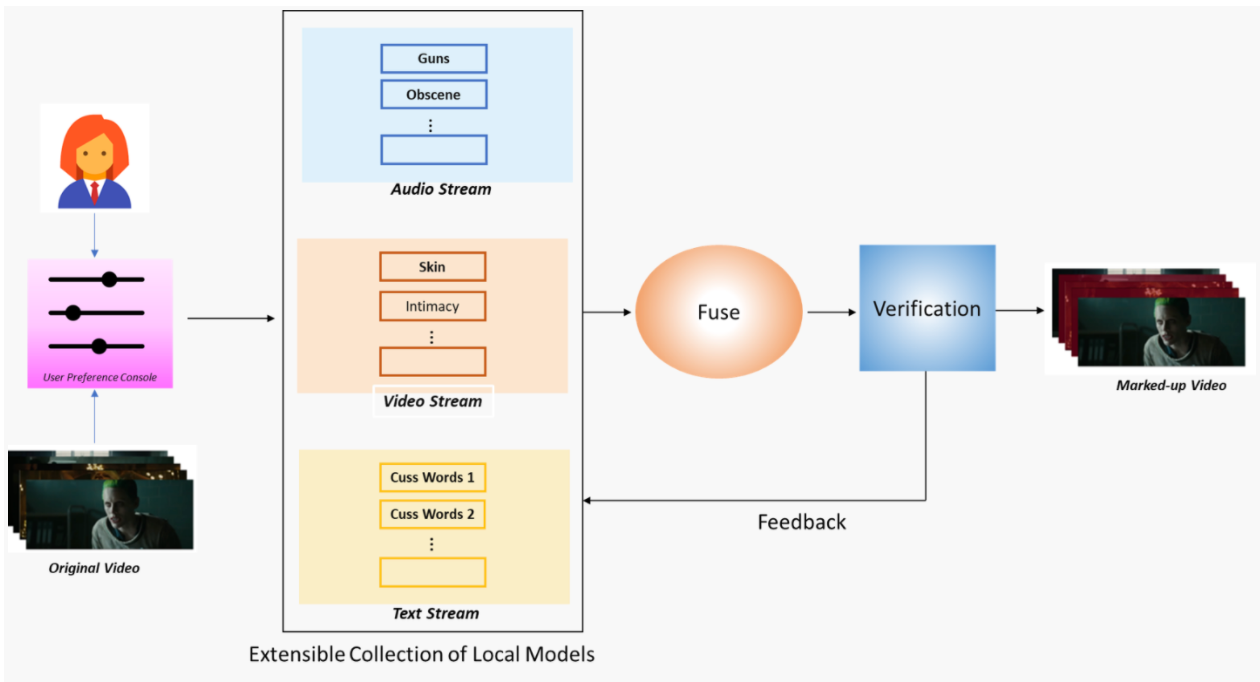

Towards an AI Infused System for Objectionable Content Detection in OTT [IBM Research Laboratory]With the substantial increase in the consumption of OTT content in recent years, personalized objectionable content detection and filtering has become pertinent for making movie and TV series content suitable for family or children viewing. We propose an objectionable content detection framework which leverages multiple modalities like (i) videos, (ii) subtitle text and (iii) audio to detect (a) violence, (b) explicit NSFW content, and (c) offensive speech in videos. |

|

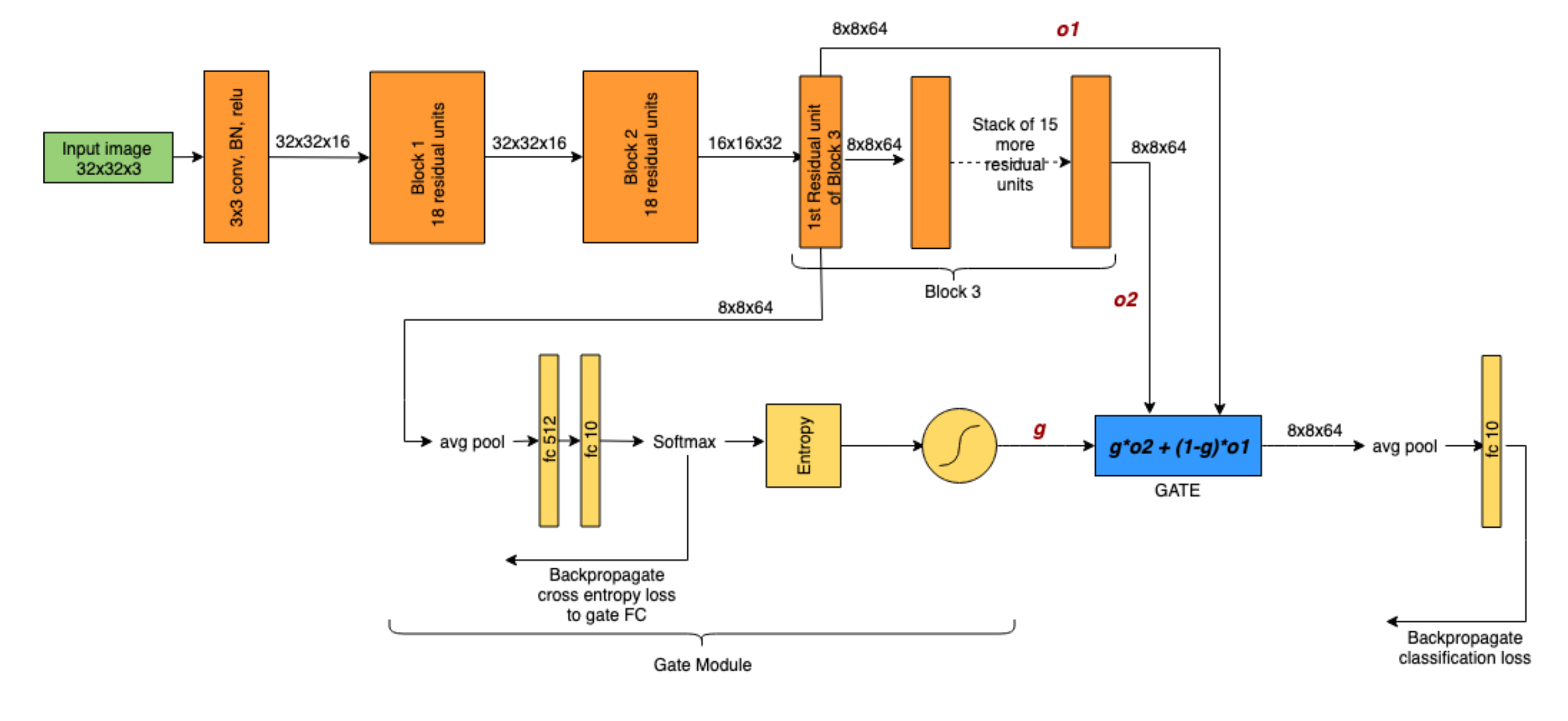

Memorization and Generalization in CNNs using Soft Gating Mechanisms [Image Team GREYC, University of Caen Normandy]Technical Report / Code / Technical Report, Suboptimal ResNet Gating Mechanisms A deep neural network learns patterns to hypothesize a large subset of samples that lie in-distribution and it memorises any out-of-distribution samples. While fitting to noise, the generalisation error increases and the DNN performs poorly on test set. In this work, we aim to examine if dedicating different layers to the generalizable and memorizable samples in a DNN could simplify the decision boundary learnt by the network and lead to improved generalization in DNNs. While the initial layers that are common to all examples tend to learn general patterns, we dedicate certain deeper additional layers in the network to memorise the out-of-distribution examples. |